Intelligent Agent

Artificial Intelligence Chapter 1,2

Date: 23.02.28 ~ 23.03.12

Writer: 9tailwolf

Introduction

This chapter introduces artificial intelligence (AI) and studies how AI is defined.

What is AI?

Definition of AI

AI can be defined along two dimensions: thought processes versus behavior, and human-like versus rational performance. Combining these two dimensions gives four definitions of AI.

- Systems that think like humans

- Systems that think rationally

- Systems that act like humans

- Systems that act rationally

Rational Agent

An agent is anything that can receive percepts and act. Abstractly, an agent is a function from percept histories to actions: f : P* → A, where P* is the set of percept histories and A is the set of actions.
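To make the idea of an agent as a function from percept histories to actions concrete, here is a minimal sketch of a table-driven agent. The two-square vacuum world, the percept format `(location, status)`, and the contents of `TABLE` are all assumptions chosen for illustration; they are not part of the original notes.

```python
from typing import Dict, List, Tuple

Percept = Tuple[str, str]  # hypothetical percept format: (location, status)

# Hypothetical lookup table: each key is a complete percept history.
TABLE: Dict[Tuple[Percept, ...], str] = {
    (("A", "Dirty"),): "Suck",
    (("A", "Clean"),): "Right",
    (("A", "Clean"), ("B", "Dirty")): "Suck",
    (("A", "Clean"), ("B", "Clean")): "Left",
}


def make_table_driven_agent(table):
    """Return an agent that maps its whole percept history to an action."""
    history: List[Percept] = []

    def agent(percept):
        history.append(percept)
        # Histories missing from the table fall back to doing nothing.
        return table.get(tuple(history), "NoOp")

    return agent


agent = make_table_driven_agent(TABLE)
print(agent(("A", "Clean")))  # Right
print(agent(("B", "Dirty")))  # Suck
```

The table grows with every possible percept history, which is one reason practical agents use more compact programs than a plain lookup.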

Our goal is to find or build the agent with the best performance.

Rationality depends on four things.

- The performance measure that defines the criterion of success

- The agent’s prior knowledge of the environment

- The actions that the agent can perform

- The agent’s percept sequence to date

Task Environment

To design a rational agent, we must specify the task environment. A task environment is described by the following four components, often abbreviated as PEAS.

- Performance measure : The criterion for whether the agent satisfies its goal

- Environment : The world and the states the agent operates in

- Actuators : What the agent can do

- Sensors : How the agent obtains information about the state
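The four components above can be written down as a small record. The sketch below is only an illustration: the class name `TaskEnvironment` and the vacuum-world entries are assumptions, not something defined in the chapter.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TaskEnvironment:
    """Hypothetical container for a PEAS description."""
    performance_measure: List[str]
    environment: List[str]
    actuators: List[str]
    sensors: List[str]


# Illustrative description of a two-square vacuum world (assumed example).
vacuum_world = TaskEnvironment(
    performance_measure=["squares cleaned", "moves used"],
    environment=["square A", "square B", "dirt"],
    actuators=["move left", "move right", "suck"],
    sensors=["location sensor", "dirt sensor"],
)

for field_name, values in vars(vacuum_world).items():
    print(f"{field_name}: {', '.join(values)}")
```

Writing the description out this way forces each of the four components to be stated explicitly before any agent program is designed.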

The properties of the environment are also important, because the appropriate agent algorithm changes with them. Environments can be classified along many properties.

- Fully observable : Partially observable

- Single agent : Multiagent

- Competitive : Cooperative

- Deterministic : Stochastic

- Episodic : Sequential

- Static : Dynamic

- Discrete : Continuous

- Known : Unknown
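As a rough sketch, several of these properties can be recorded as boolean flags so that two environments can be compared side by side. The class `EnvironmentProperties`, its helper method, and the two example classifications (a crossword puzzle and taxi driving, classified the way textbooks commonly do) are illustrative assumptions. The competitive/cooperative and known/unknown properties are left out to keep the sketch short.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentProperties:
    """Hypothetical record; each flag names one side of a property pair."""
    fully_observable: bool  # False means partially observable
    single_agent: bool      # False means multiagent
    deterministic: bool     # False means stochastic
    episodic: bool          # False means sequential
    static: bool            # False means dynamic
    discrete: bool          # False means continuous

    def needs_internal_state(self) -> bool:
        # Under partial observability the current percept alone is not
        # enough, so the agent has to remember something about the past.
        return not self.fully_observable


# Assumed example classifications, for illustration only.
crossword = EnvironmentProperties(True, True, True, False, True, True)
taxi = EnvironmentProperties(False, False, False, False, False, False)

print(crossword.needs_internal_state())  # False
print(taxi.needs_internal_state())       # True
```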

Agent Types

The agent program implements the agent function: it takes percepts from the sensors as input and returns actions to the actuators. The program runs on the architecture of the agent, the device that provides those sensors and actuators. As a result, an agent consists of an architecture and a program. There are four basic types of agent, listed here in order of increasing generality.

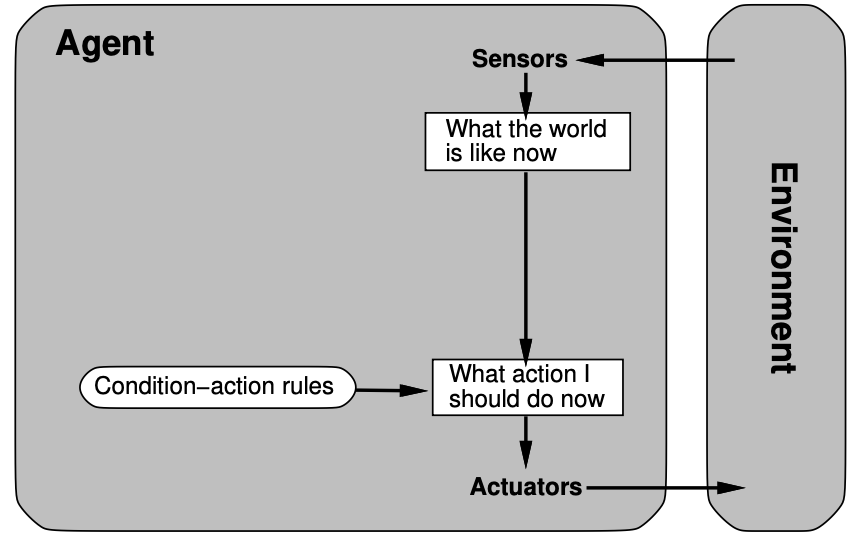

Simple Reflex Agent

The simple reflex agent has the simplest structure. Its action is determined by condition-action rules applied to the current percept only.
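As a concrete illustration, here is a minimal runnable sketch of a simple reflex agent for a two-square vacuum world. The world, the percept format `(location, status)`, and the `RULES` table are assumptions made for this example.

```python
# Hypothetical condition-action rules for a two-square vacuum world.
RULES = {
    ("A", "Dirty"): "Suck",
    ("B", "Dirty"): "Suck",
    ("A", "Clean"): "Right",
    ("B", "Clean"): "Left",
}


def simple_reflex_agent(percept):
    """Choose an action from the current percept only; nothing is remembered."""
    location, status = percept
    return RULES[(location, status)]


print(simple_reflex_agent(("A", "Dirty")))  # Suck
print(simple_reflex_agent(("A", "Clean")))  # Right
```

Because the agent keeps no memory, it answers the same percept with the same action every time, so in this world it keeps moving back and forth even after both squares are clean.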

def SRA(Percept):

state = input_interpret(Percept)

rule = Rule(Percept)

action = rule.action

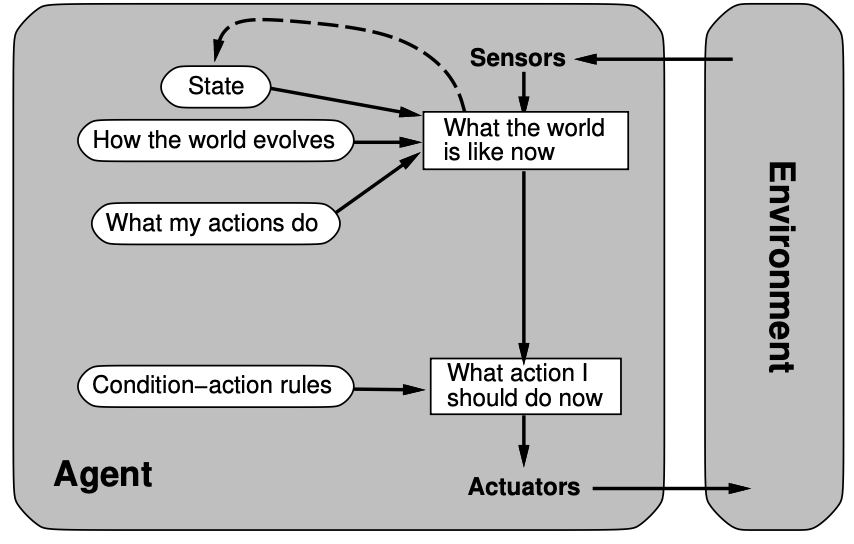

return actionModel Based Reflex Agent

A model-based reflex agent handles partially observable environments. By maintaining an internal state that depends on the percept history, it can keep track of parts of the state that it cannot currently observe.
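A minimal sketch of this idea, again in an assumed two-square vacuum world: the agent remembers the last known status of each square, so it can stop once it believes both are clean. The class name and the simple model of the `Suck` action are illustrative assumptions.

```python
class ModelBasedVacuumAgent:
    """Hypothetical agent that tracks squares it cannot currently see."""

    def __init__(self):
        # Internal state: last known status of each square (None = unknown).
        self.model = {"A": None, "B": None}

    def __call__(self, percept):
        location, status = percept
        # Update the internal state with the new percept.
        self.model[location] = status
        if status == "Dirty":
            # Assumed model of the action: sucking leaves the square clean.
            self.model[location] = "Clean"
            return "Suck"
        if all(s == "Clean" for s in self.model.values()):
            return "NoOp"
        return "Right" if location == "A" else "Left"


agent = ModelBasedVacuumAgent()
print(agent(("A", "Dirty")))  # Suck
print(agent(("A", "Clean")))  # Right
print(agent(("B", "Clean")))  # NoOp
```

The last call returns `NoOp` only because the agent remembers that square A was cleaned earlier, which the current percept alone does not reveal.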

def MBRA(location, state):

state = update_state(state,action,percept,model)

rule = Rule(state,rules)

action = rule.action

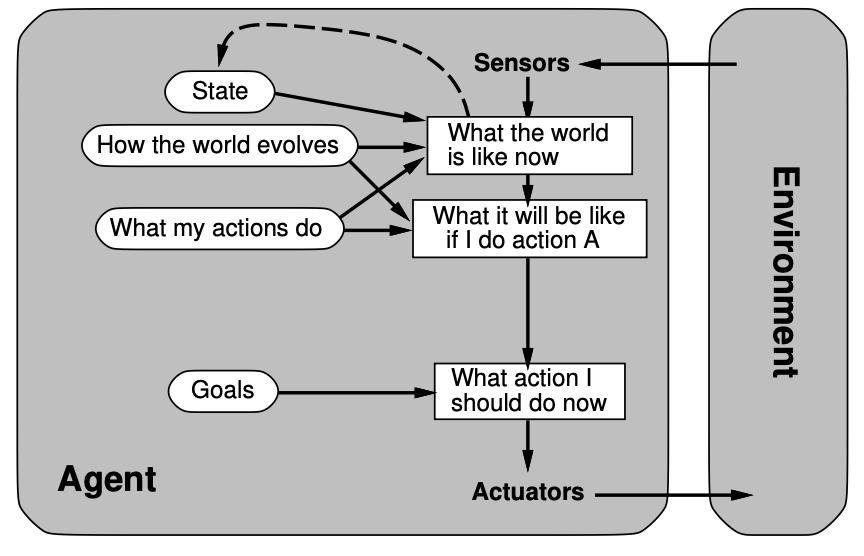

return actionGoal-Based Agent

Knowing the current state is not always enough to decide what to do. With goal information, the agent can make rational decisions that bring it closer to the goal. A goal-based agent applies this idea.

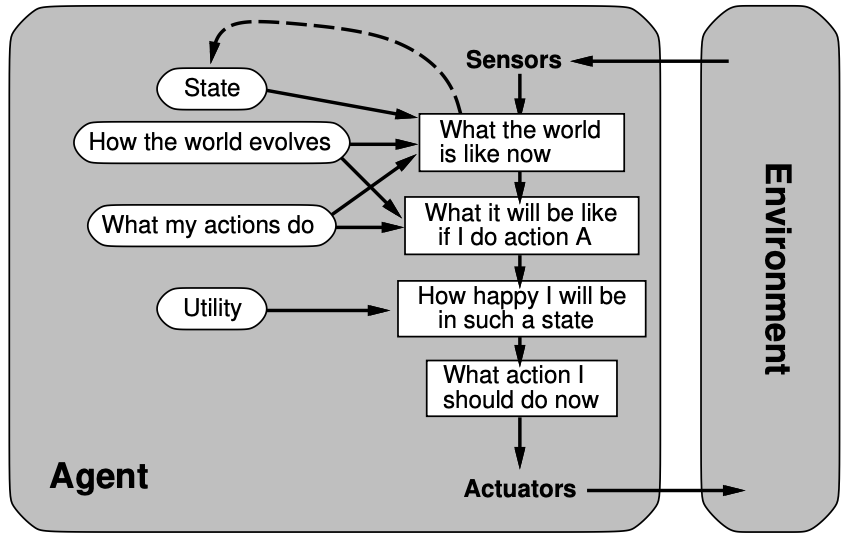
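One way to sketch a goal-based agent is to search for a sequence of actions that reaches the goal and then take the first step of that plan. The example below uses breadth-first search, which is just one possible choice; the state graph `GRAPH` and the function names are assumptions for illustration.

```python
from collections import deque

# Hypothetical state graph: state -> {action: next_state}.
GRAPH = {
    "A": {"east": "B", "south": "C"},
    "B": {"south": "D"},
    "C": {"east": "D"},
    "D": {"east": "E"},
    "E": {},
}


def plan(start, goal, graph):
    """Breadth-first search; returns a list of actions, or None if unreachable."""
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, actions = frontier.popleft()
        if state == goal:
            return actions
        for action, next_state in graph[state].items():
            if next_state not in visited:
                visited.add(next_state)
                frontier.append((next_state, actions + [action]))
    return None


def goal_based_agent(state, goal, graph):
    """Take the first action of a plan that reaches the goal."""
    actions = plan(state, goal, graph)
    return actions[0] if actions else "NoOp"


print(plan("A", "E", GRAPH))              # ['east', 'south', 'east']
print(goal_based_agent("A", "E", GRAPH))  # east
```

Changing the goal changes the behavior without rewriting any rules, which is the main flexibility a goal-based agent gains over a reflex agent.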

Utility-Based Agent

A goal alone is not enough to choose the best action. A goal-based agent can find the goal, but the way it gets there may be costly. A utility-based agent uses a utility function to choose the actions that lead to more desirable outcomes.

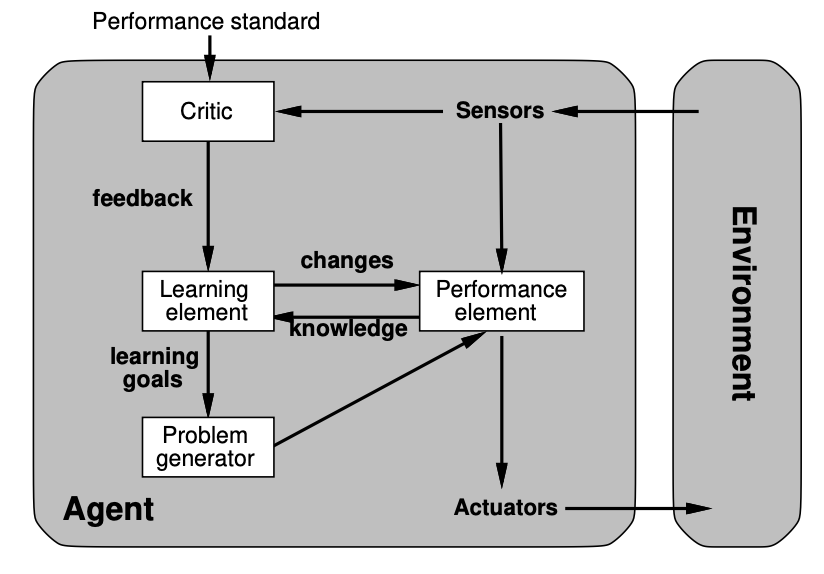
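A minimal sketch of a utility-based choice is to pick the action with the highest expected utility. In the example below both routes reach the destination, so a goal test alone cannot separate them. The outcome probabilities and utility values are made-up numbers for illustration.

```python
# Hypothetical outcome model: action -> list of (probability, outcome).
OUTCOMES = {
    "highway": [(0.8, "on_time"), (0.2, "late")],
    "back_road": [(1.0, "slightly_late")],
}

# Hypothetical utility of each outcome.
UTILITY = {"on_time": 10.0, "slightly_late": 7.0, "late": 0.0}


def expected_utility(action):
    """Probability-weighted average utility of an action's outcomes."""
    return sum(p * UTILITY[outcome] for p, outcome in OUTCOMES[action])


def utility_based_agent(actions):
    """Choose the action with the highest expected utility."""
    return max(actions, key=expected_utility)


print(utility_based_agent(["highway", "back_road"]))  # highway
```

With these numbers the highway has an expected utility of about 8 against 7 for the back road, so the agent prefers it even though it carries some risk of arriving late.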

Learning Agent

It is often impractical to write the whole agent program by hand. Learning lets an agent operate in an initially unknown environment and improve its performance over time.

A learning agent has four elements.

- Learning element : Which is responsible for making improvements.

- Performance element : Which is responsible for selecting external actions.

- Critic : Which is necessary because the percepts themselves provide no indication of the agent’s success.

- Problem Generator : Which suggests actions that should lead to new experiences.
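The four elements can be sketched as methods of one small class. This is a heavily simplified illustration: the class `LearningAgent`, the running-average update, and a critic that scores an action directly with fixed numbers are all assumptions, not the general design.

```python
class LearningAgent:
    """Hypothetical agent that learns which action scores best."""

    def __init__(self, actions, min_trials=1):
        self.estimates = {a: 0.0 for a in actions}  # learned knowledge
        self.counts = {a: 0 for a in actions}
        self.min_trials = min_trials

    def problem_generator(self):
        """Suggest an action that has not been tried enough yet, if any."""
        for action, n in self.counts.items():
            if n < self.min_trials:
                return action
        return None

    def performance_element(self):
        """Select the action currently estimated to be best."""
        return max(self.estimates, key=self.estimates.get)

    def learning_element(self, action, feedback):
        """Update the running-average estimate for the action."""
        self.counts[action] += 1
        n = self.counts[action]
        self.estimates[action] += (feedback - self.estimates[action]) / n

    def step(self, critic):
        action = self.problem_generator() or self.performance_element()
        self.learning_element(action, critic(action))
        return action


def critic(action):
    # Hypothetical performance standard: a fixed score per action.
    return {"left": 0.2, "right": 1.0}[action]


agent = LearningAgent(["left", "right"])
history = [agent.step(critic) for _ in range(4)]
print(history)  # ['left', 'right', 'right', 'right']
```

The first two steps come from the problem generator, which makes the agent try each action once; after that the performance element takes over and keeps choosing the action the critic scored highest.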